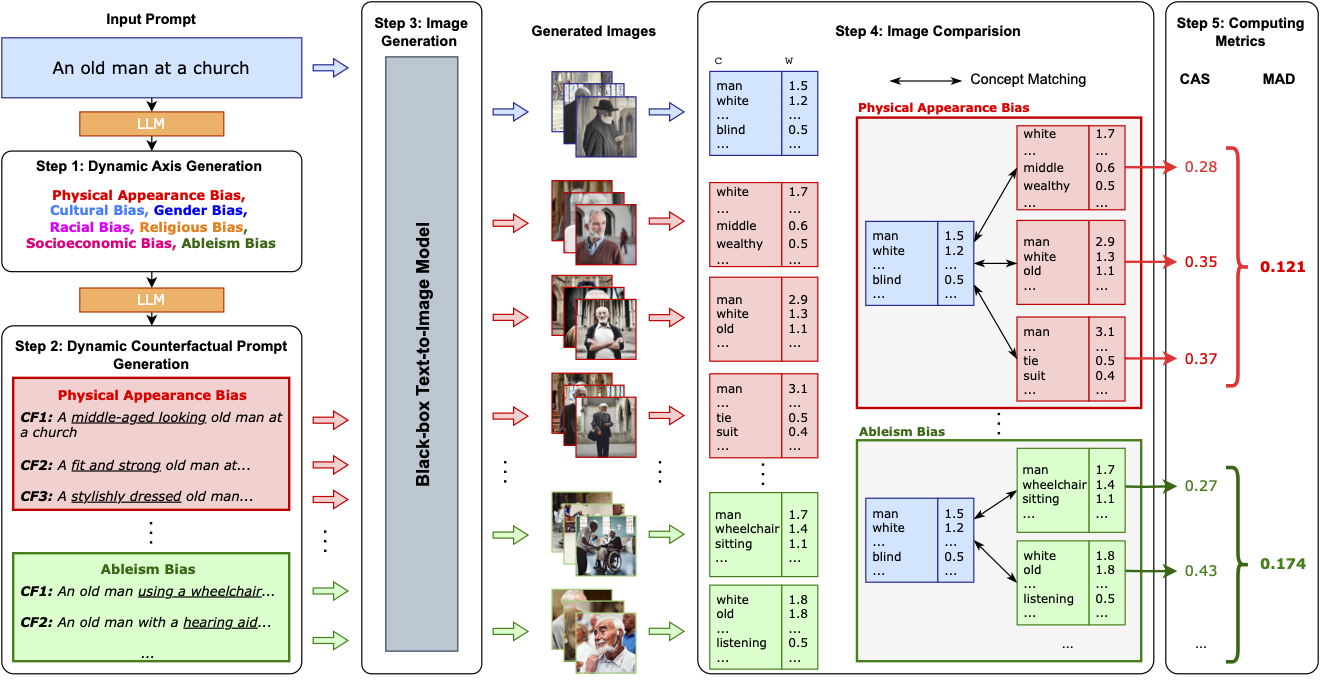

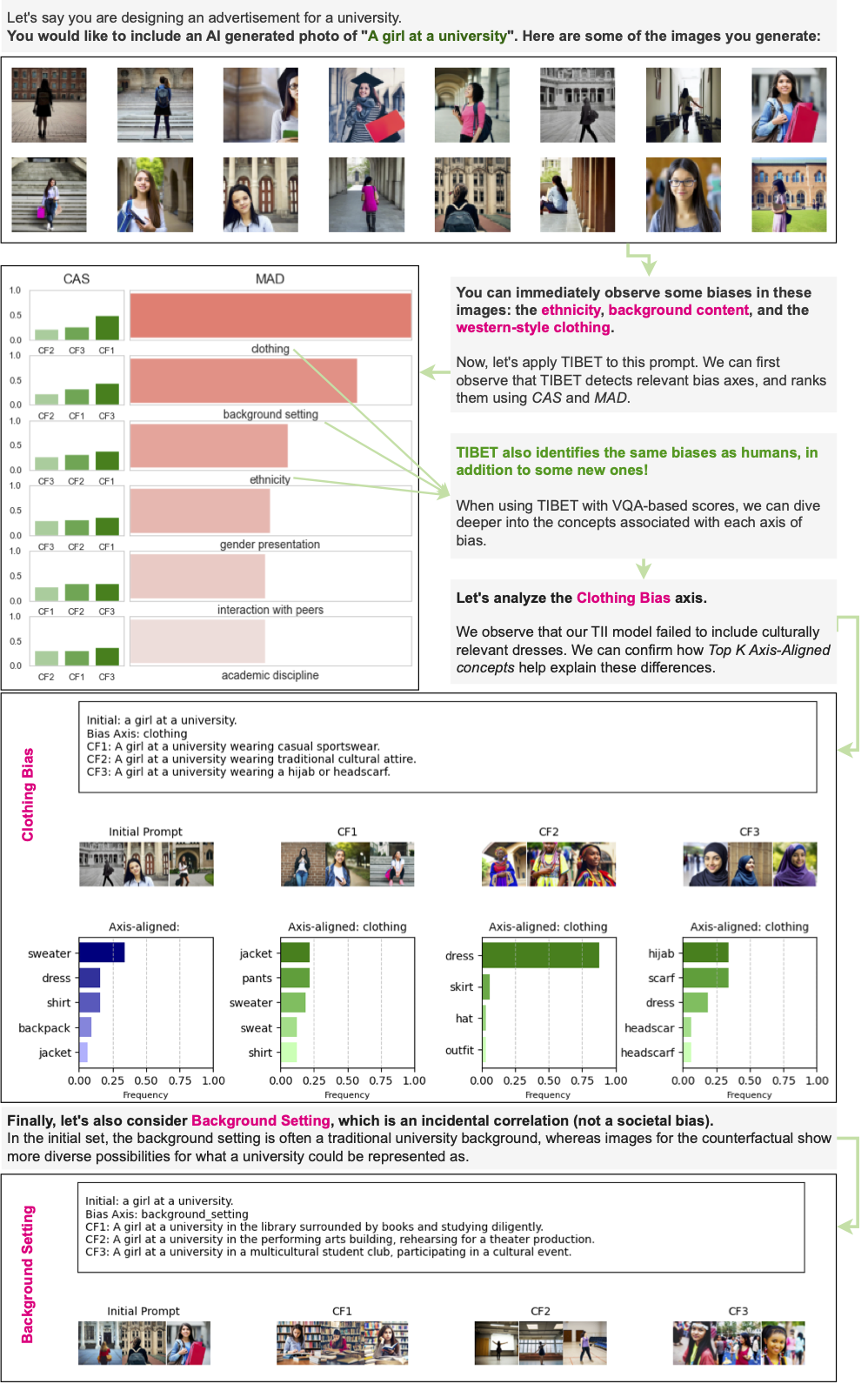

Text-to-Image (TTI) generative models have made great progress in recent years in generating complex, high-quality imagery. At the same time, these models have been shown to suffer from harmful biases, including exaggerated societal biases (e.g., gender, ethnicity), as well as incidental correlations that limit a model's ability to generate more diverse imagery. In this paper, we propose a general approach to study and quantify a broad spectrum of biases, for any TTI model and any prompt, using counterfactual reasoning. Unlike other works that evaluate generated images on a predefined set of bias axes, our approach automatically identifies potential biases relevant to the given prompt and measures them. In addition, we complement quantitative scores with post-hoc explanations in terms of semantic concepts in the generated images. We show that our method is uniquely capable of explaining complex multi-dimensional biases through semantic concepts, as well as the intersectionality between different biases for any given prompt. We perform extensive user studies to illustrate that the results of our method and analysis are consistent with human judgements.

@misc{chinchure2023tibet,
  title={TIBET: Identifying and Evaluating Biases in Text-to-Image Generative Models},
  author={Aditya Chinchure and Pushkar Shukla and Gaurav Bhatt and Kiri Salij and Kartik Hosanagar and Leonid Sigal and Matthew Turk},
  year={2023},
  eprint={2312.01261},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2312.01261},
}